This paper is available on arXiv under a CC 4.0 license.

Authors:

(1) Anh Nguyen-Duc, University of South Eastern Norway, Bø i Telemark, Norway 3800 and Norwegian University of Science and Technology, Trondheim, Norway 7012;

(2) Beatriz Cabrero-Daniel, University of Gothenburg, Gothenburg, Sweden;

(3) Adam Przybylek, Gdansk University of Technology, Gdansk, Poland;

(4) Chetan Arora, Monash University, Melbourne, Australia;

(5) Dron Khanna, Free University of Bozen-Bolzano, Bolzano, Italy;

(6) Tomas Herda, Austrian Post - Konzern IT, Vienna, Austria;

(7) Usman Rafiq, Free University of Bozen-Bolzano, Bolzano, Italy;

(8) Jorge Melegati, Free University of Bozen-Bolzano, Bolzano, Italy;

(9) Eduardo Guerra, Free University of Bozen-Bolzano, Bolzano, Italy;

(10) Kai-Kristian Kemell, University of Helsinki, Helsinki, Finland;

(11) Mika Saari, Tampere University, Tampere, Finland;

(12) Zheying Zhang, Tampere University, Tampere, Finland;

(13) Huy Le, Vietnam National University Ho Chi Minh City, Hochiminh City, Vietnam and Ho Chi Minh City University of Technology, Hochiminh City, Vietnam;

(14) Tho Quan, Vietnam National University Ho Chi Minh City, Hochiminh City, Vietnam and Ho Chi Minh City University of Technology, Hochiminh City, Vietnam;

(15) Pekka Abrahamsson, Tampere University, Tampere, Finland.

Table of Links

- Abstract and Introduction

- Background

- Research Approach

- Research Agenda

- Outlook and Conclusions

- References

Background

The application of AI/ML has a long history in SE research [16, 17, 18]. The use of GenAI specifically, however, is a more recent topic. While the promise of GenAI has been acknowledged for some time, progress in the research area has been rapid. Though some papers had already explored the application of GPT-2 to code generation [19], for example, GenAI was not a prominent research area in SE until 2020. Following the recent improvements in the performance of these systems, especially the release of services such as GitHub Copilot and ChatGPT, research interest has now surged across disciplines, including SE. Numerous papers are currently available through self-archiving repositories such as arXiv and Papers with Code. To prepare readers for further content in our research agenda, we present in this section relevant terms and definitions (Section 2.1), the historical development of GenAI (Section 2.2), and fundamentals of Large Language Models (Section 2.3).

2.1. Terminologies

Generative modelling is an AI technique that generates synthetic artifacts by analyzing training examples, learning their patterns and distribution, and then creating realistic facsimiles [20]. GenAI uses generative modelling and advances in deep learning (DL) to produce diverse content at scale by utilizing existing media such as text, graphics, audio, and video. The following terms are relevant to GenAI:

• AI-Generated Content (AIGC) is content created by AI algorithms without human intervention.

• Fine-Tuning (FT) updates the weights of a pre-trained model by training on supervised labels specific to the desired task [21].

• Few-shot learning happens when an AI model is given a few demonstrations of the task at inference time as conditioning, without any update to its weights [21].

• Generative Pre-trained Transformer (GPT) is a machine learning model that uses unsupervised and supervised learning techniques to understand and generate human-like language [22].

• Natural Language Processing (NLP) is a branch of AI that focuses on the interaction between computers and human language. NLP involves the development of algorithms and models that allow computers to understand, interpret, and generate human language.

• Language model is a statistical (AI) model trained to predict the next word in a sequence and applied to several NLP tasks, e.g., classification and generation [23].

• Large language model (LLM) is a language model with a substantially large number of weights and parameters and a complex training architecture that can perform a variety of NLP tasks, including generating and classifying text and conversationally answering questions [21]. While the concept of an LLM is currently widely used to describe a subset of GenAI models, especially in the field and in the media, what exactly constitutes ’large’ is unclear, and the concept is vague in this regard. Nonetheless, given its widespread use, we utilize the concept in this paper as well, acknowledging this limitation.

• Prompt is an instruction or discussion topic a user provides for the AI model to respond to.

• Prompt Engineering is the process of designing and refining prompts to instruct or query LLMs to achieve a desired outcome effectively.
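To make the few-shot and prompt definitions above concrete, the following minimal sketch assembles a prompt from an instruction, a few demonstrations, and a new query. The function name `build_few_shot_prompt`, the `Input:`/`Output:` layout, and the example tasks are illustrative assumptions, not a format prescribed by the cited works; no model is called.

```python
def build_few_shot_prompt(instruction, demonstrations, query):
    """Assemble a few-shot prompt: instruction, worked examples, then the new input.

    The "Input:/Output:" layout is a hypothetical convention chosen for this sketch.
    """
    lines = [instruction, ""]
    for source, target in demonstrations:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # The last block is left open so that the model completes the output.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


# Hypothetical demonstrations for a code-generation task.
demos = [
    ("add two integers a and b", "def add(a, b): return a + b"),
    ("return the larger of x and y", "def larger(x, y): return max(x, y)"),
]
prompt = build_few_shot_prompt(
    "Translate each description into a Python function.",
    demos,
    "reverse a string s",
)
print(prompt)
```

The demonstrations condition the model at inference time only; nothing in this sketch changes model weights, which is what distinguishes the few-shot setting from fine-tuning.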

2.2. History of GenAI

To better understand the nature of GenAI and its foundation, we present a brief history of AI in the last 80 years:

1. Early Beginnings (1950s-1980s): From the 1950s, computer scientists were already exploring the idea of creating computer programs that could generate human-like responses in natural language. From the 1960s, expert systems gained popularity. These systems used knowledge representation and rule-based reasoning to solve specific problems, demonstrating the potential of AI to generate intelligent outputs. From the 1970s, researchers began developing early NLP systems, focusing on tasks like machine translation, speech recognition, and text generation. Systems like ELIZA (1966) and SHRDLU (1970) showcased early attempts at natural language generation.

2. Rule-based Systems and Neural Networks (1980s-1990s): Rule-based and expert systems continued to evolve during this period, with advancements in knowledge representation and inference engines. Neural networks, inspired by the structure of the human brain, gained attention in the 1980s. Researchers like Geoffrey Hinton and Yann LeCun made significant contributions to the development of neural networks, which are fundamental to GenAI.

3. Rise of Machine Learning (1990s-2000s): Machine learning techniques, including decision trees, support vector machines, and Bayesian networks, started becoming more prevalent. These methods laid the groundwork for GenAI by improving pattern recognition and prediction.

4. Deep Learning Resurgence (2010-2015): Deep learning, powered by advances in hardware and the availability of large datasets, experienced a resurgence in the 2010s. Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) emerged as powerful tools for generative tasks such as image generation and text generation. Generative Adversarial Networks (GANs), introduced by Ian Goodfellow and his colleagues in 2014, revolutionized GenAI. GANs introduced a new paradigm for training generative models by using a two-network adversarial framework. Reinforcement learning also made strides in GenAI, especially in game-related domains.

5. Transformers and BERT (2015-now): Transformers, introduced in a seminal paper titled “Attention Is All You Need” by Vaswani et al. in 2017, became the backbone of many state-of-the-art NLP models. Models like BERT (Bidirectional Encoder Representations from Transformers) showed remarkable progress in language understanding and generation tasks. GenAI has found applications in various domains, including natural language generation, image synthesis, music composition, and more. Chatbots, language models like GPT-3, and creative AI tools have become prominent examples of GenAI in software implementation.

2.3. Fundamentals of LLMs

LLMs are AI systems trained to understand and process natural language. They are a type of ML model that uses very deep artificial neural networks and can process vast amounts of language data. An LLM can be trained on a very large dataset comprising millions of sentences and words. The training process involves predicting the next word in a sentence based on the previous words, allowing the model to “learn” the grammar and syntax of that language. With powerful computing capabilities and a large number of parameters, such a model can learn the complex relationships in language and produce natural-sounding sentences. Current LLMs have made significant progress in natural language processing tasks, including machine translation, headline generation, question answering, and automatic text generation. They can generate high-quality natural text, closely aligned with the content and context provided to them.

For an incomplete sentence, for example “The book is on the”, these models use training data to produce a probability distribution over candidate words and determine the most likely next word, e.g., “table” or “bookshelf”. Initial efforts to construct large-scale language models used N-gram methods and simple smoothing techniques [24, 25]. More advanced methods used various neural network architectures, such as feedforward networks [26] and recurrent networks [27], for the language modelling task. This also led to the development of word embeddings and related techniques to map words to semantically meaningful representations [28]. The Transformer architecture [29], originally developed for machine translation, sparked new interest in language models, leading to the development of contextualized word embeddings [30] and Generative Pre-trained Transformers (GPTs) [31]. Recently, a common approach to improving model performance has been to increase the number of parameters and the amount of training data. This has resulted in surprising leaps in the machine’s ability to process natural language.
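The next-word prediction described above can be sketched with the simplest of the mentioned approaches, an N-gram model with smoothing. The example below is a bigram model with add-one (Laplace) smoothing over a three-sentence toy corpus; the corpus, the function names, and the choice of add-one smoothing are assumptions made for illustration and are not taken from [24, 25].

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count bigrams and collect the vocabulary from whitespace-tokenized sentences."""
    counts = defaultdict(Counter)
    vocab = set()
    for sentence in corpus:
        tokens = sentence.lower().split()
        vocab.update(tokens)
        for prev, nxt in zip(tokens, tokens[1:]):
            counts[prev][nxt] += 1
    return counts, sorted(vocab)


def next_word_distribution(counts, vocab, prev):
    """Return P(next | prev) using add-one (Laplace) smoothing over the vocabulary."""
    total = sum(counts[prev].values()) + len(vocab)
    return {word: (counts[prev][word] + 1) / total for word in vocab}


# Toy corpus (hypothetical); a real model would be trained on far more text.
corpus = [
    "the book is on the table",
    "the cup is on the table",
    "a pen is on the bookshelf",
]
counts, vocab = train_bigram(corpus)

# A bigram model conditions only on the last word of "The book is on the".
dist = next_word_distribution(counts, vocab, "the")
best = max(dist, key=dist.get)
print(best, round(dist[best], 3))
```

Smoothing gives every vocabulary word a non-zero probability, so unseen continuations are not ruled out. Neural language models replace the count table with learned parameters and condition on a much longer context, but they still output a distribution over the next token.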

Parameter-Efficient Fine-Tuning

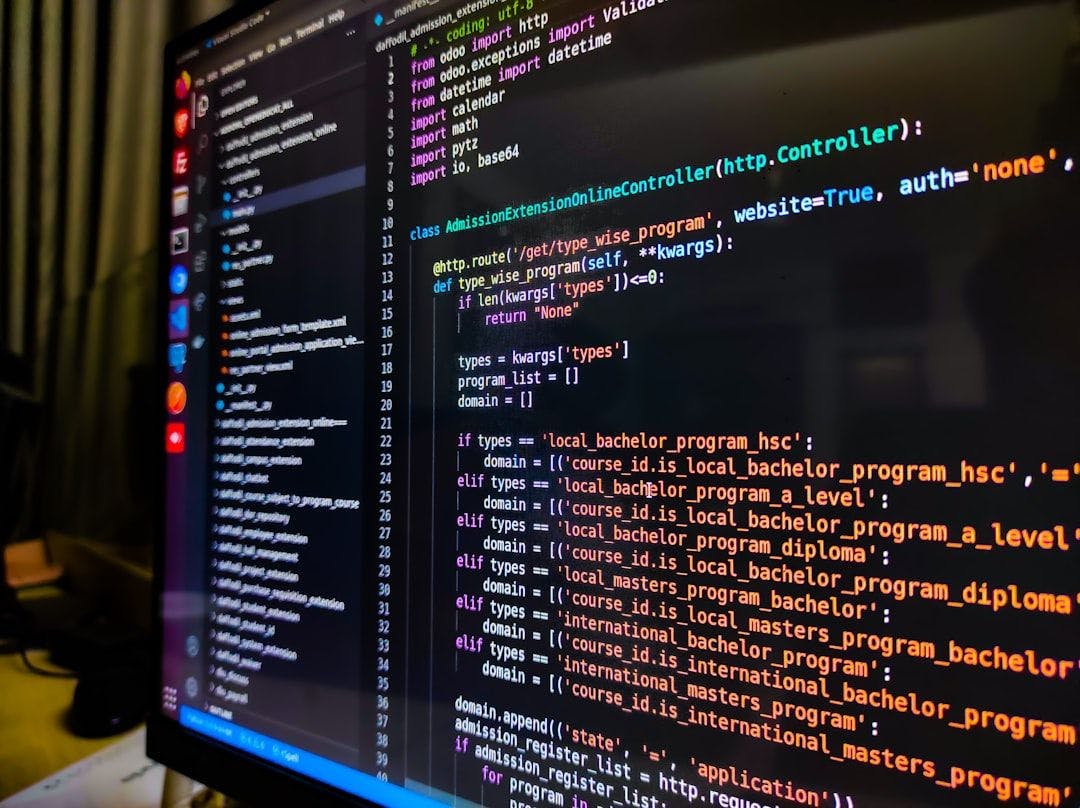

In October 2018, the BERT Large model [32] was trained with 350 million parameters, making it the largest Transformer model publicly disclosed up to that point. At the time, even the most advanced hardware struggled to fine-tune this model, and “out of memory” errors became a significant obstacle to overcome. Five years later, new models were introduced with a staggering 540 billion parameters [33], a more than 1500-fold increase. However, the memory capacity of each GPU has increased less than tenfold (max 80 GB) due to the high cost of high-bandwidth memory. Model sizes are increasing much faster than computational resources, making fine-tuning large models for smaller tasks impractical for most users. In-context learning, the ability of an AI model to generate responses or make predictions based on the specific context provided to it [31], reflects ongoing advances in natural language processing. However, the limited context length of Transformers reduces the effective training set to just a few examples. This limitation, combined with inconsistent performance, presents a new challenge. Furthermore, expanding context size significantly increases computational costs.
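A back-of-the-envelope calculation illustrates the gap between model growth and GPU memory. It takes 350 million and 540 billion as the two parameter counts and 80 GB as the memory of one GPU. The 2 bytes per parameter (16-bit weights) is an assumption of this sketch, and the estimate covers only storing the weights, not gradients, optimizer state, or activations.

```python
import math

SMALL_MODEL_PARAMS = 350_000_000        # BERT Large figure quoted in the text
LARGE_MODEL_PARAMS = 540_000_000_000    # 540 billion parameters
GPU_MEMORY_BYTES = 80 * 10**9           # 80 GB per GPU (decimal gigabytes)
BYTES_PER_PARAM = 2                     # assumption: 16-bit weights

# Growth factor in parameter count between the two models.
growth = LARGE_MODEL_PARAMS / SMALL_MODEL_PARAMS

# Memory needed just to hold the weights of the larger model.
weight_bytes = LARGE_MODEL_PARAMS * BYTES_PER_PARAM
gpus_for_weights = math.ceil(weight_bytes / GPU_MEMORY_BYTES)

print(f"parameter growth: {growth:.0f}x")
print(f"weights alone: {weight_bytes / 10**9:.0f} GB")
print(f"80 GB GPUs needed to hold the weights: {gpus_for_weights}")
```

Under these assumptions the parameter count grows about 1543-fold, and the weights alone occupy 1080 GB, or 14 GPUs of 80 GB each, before any training state is accounted for.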

![Figure 1: Several optimal parameter fine-tuning methods [34]](https://cdn.hackernoon.com/images/fWZa4tUiBGemnqQfBGgCPf9594N2-ni83ree.jpeg)

Parameter-Efficient Fine-Tuning methods have been researched to address these challenges by training only a small subset of parameters, which might be a part of the existing model parameters or a new set of parameters added to the original model. These methods vary in terms of parameter efficiency, memory efficiency, training speed, model output quality, and additional computational overhead (if any).
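The two options named above, training part of the existing parameters or training a new set of added parameters, can be compared by counting trainable parameters. The sketch below uses a hypothetical block of six weight matrices and, for the added-parameter case, low-rank factors attached to each frozen matrix. The layer shapes, the rank of 8, and the low-rank construction are illustrative assumptions, not necessarily the methods shown in Figure 1.

```python
def count_full(shapes):
    """Trainable parameters when every (d_out x d_in) weight matrix is updated."""
    return sum(d_out * d_in for d_out, d_in in shapes)


def count_low_rank(shapes, rank):
    """Trainable parameters when each frozen matrix gets two added low-rank
    factors of shapes (d_out x rank) and (rank x d_in)."""
    return sum(rank * (d_out + d_in) for d_out, d_in in shapes)


# Hypothetical block: four 1024x1024 projections plus two feedforward matrices.
shapes = [(1024, 1024)] * 4 + [(4096, 1024), (1024, 4096)]

full = count_full(shapes)                  # full fine-tuning
subset = count_full(shapes[-1:])           # train only the last existing matrix
added = count_low_rank(shapes, rank=8)     # train only the added low-rank factors

print(f"full fine-tuning: {full:,} trainable parameters")
print(f"subset of existing parameters: {subset:,} ({subset / full:.1%})")
print(f"added low-rank parameters: {added:,} ({added / full:.2%})")
```

In this toy setting the added factors amount to roughly 1.2% of the block's parameters, which is the kind of reduction that makes gradients and optimizer state fit in limited GPU memory. Output quality and overhead depend on the method and task and are not captured by a parameter count.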