Authors:

(1) Kexun Zhang, UC Santa Barbara and Equal contribution;

(2) Hongqiao Chen, Northwood High School and Equal contribution;

(3) Lei Li, Carnegie Mellon University;

(4) William Yang Wang, UC Santa Barbara.

Table of Links

- Abstract and Intro

- Related Work

- ToolDec: LLM Tool Use via Finite-State Decoding

- Experiment: ToolDec Eliminates Syntax Errors

- Experiment: ToolDec Enables Generalizable Tool Selection

- Conclusion and References

- Appendix

ABSTRACT

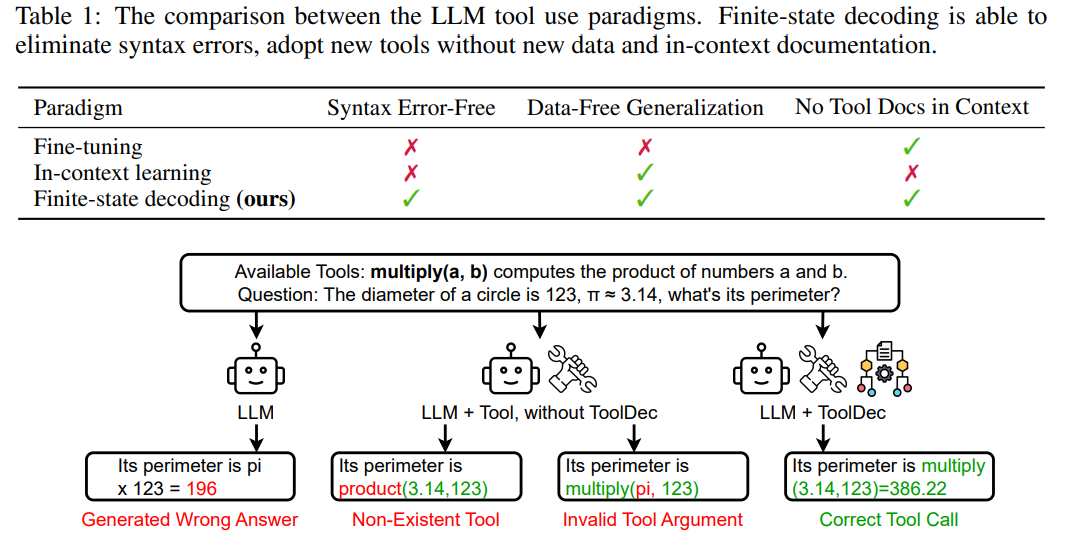

Large language models (LLMs) have shown promising capabilities in using external tools to solve complex problems. However, existing approaches either fine-tune on tool demonstrations, which does not generalize to new tools without additional training, or provide tool documentation in context, which limits the number of tools that can be used. Both approaches often generate syntactically invalid tool calls. In this paper, we propose TOOLDEC, a finite-state machine-guided decoding algorithm for tool-augmented LLMs. TOOLDEC eliminates tool-related errors for any tool-augmented LLM by ensuring valid tool names and type-conforming arguments. Furthermore, TOOLDEC enables LLMs to effectively select tools using only the information contained in their names, with no need for fine-tuning or in-context documentation. We evaluated multiple prior methods and their TOOLDEC-enhanced versions on a variety of tasks involving tools such as math functions, knowledge graph relations, and complex real-world RESTful APIs. Our experiments show that TOOLDEC reduces syntactic errors to zero, consequently achieving significantly better performance and as much as a 2x speedup. We also show that TOOLDEC achieves superior generalization performance on unseen tools, performing up to 8x better than the baselines.[1]

1. INTRODUCTION

Augmenting large language models (LLMs) with external tools (Mialon et al., 2023) enables them to solve complex problems. Current LLMs can utilize retrievers (Shen et al., 2023; Gupta & Kembhavi, 2022; Schick et al., 2023), RESTful APIs (Qin et al., 2023; Song et al., 2023), program interpreters (Chen et al., 2022; Gao et al., 2023), and various other tools. The performance of a tool-augmented LLM depends on its ability to make three key decisions: when to use a tool, which tool to use, and how to invoke it. Existing approaches learn to make these decisions through fine-tuning or in-context learning.

However, these approaches still generate erroneous tool calls. For example, in-context learning can easily generate names of tools that are not in the tool inventory, because non-existent tools may also look plausible as the next token (Song et al., 2023; Qin et al., 2023). Fine-tuned models, although they usually call tools by their correct names, often pass invalid arguments to the right tool functions (Hao et al., 2023), just as in-context learning does. Furthermore, prior approaches generalize poorly to unseen tools. Fine-tuning approaches need additional training data and further fine-tuning to adopt new tools, while in-context learning approaches require tool documentation in the prompt.

To address these issues, we propose TOOLDEC, a decoding algorithm guided by a finite-state machine (FSM) to ensure that LLMs invoke tools properly. Our core insight is to explicitly represent states during LLM decoding. Each state is associated with a valid set of tokens corresponding to tool names and tool arguments, and TOOLDEC transitions from state to state as decoding progresses. At each decoding step, TOOLDEC does not sample from the entire vocabulary of the language model; instead, it samples only from the subset of tokens allowed by the current state. The FSM that guides TOOLDEC is constructed from tool documentation and API signatures, so that the machine precisely represents the grammar of tool calls. In this way, TOOLDEC always generates syntactically correct tool calls. Figure 1 illustrates that an LLM enhanced by TOOLDEC generates the right function call, multiply, with precise arguments (“3.14” and “123”) and therefore obtains the correct result returned by the tool. More examples comparing TOOLDEC with other tool-augmented LLMs can be found in Appendix A.3.
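The state-restricted decoding loop described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' implementation: it assumes a hypothetical three-tool inventory (`TOOLS`), a call grammar of the form `name(arg, ...)` with unsigned decimal arguments, a hypothetical token vocabulary (`VOCAB`), and a toy scoring function (`toy_score`) standing in for an LLM. The FSM is written at the character level, and a multi-character token belongs to a state's valid set only if every one of its characters has a valid transition; greedy selection then happens only among those tokens.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical tool inventory: tool name -> number of numeric arguments.
TOOLS = {"multiply": 2, "add": 2, "sqrt": 1}
DIGITS = set("0123456789")

# FSM state: (phase, tool-name prefix, index of the current argument, number sub-state).
# phase is "name", "arg" or "done"; the number sub-state is "" (nothing yet),
# "int" (digits), "dot" (digits followed by "."), or "frac" (digits after the ".").
State = Tuple[str, str, int, str]
START: State = ("name", "", 0, "")


def step(state: State, ch: str) -> Optional[State]:
    """Advance the FSM by one character; None means `ch` is not allowed here."""
    phase, name, arg, num = state
    if phase == "name":
        if any(tool.startswith(name + ch) for tool in TOOLS):
            return ("name", name + ch, 0, "")
        if ch == "(" and name in TOOLS:  # the name is complete: start the arguments
            return ("arg", name, 0, "")
        return None
    if phase == "arg":
        if ch in DIGITS:
            return ("arg", name, arg, "frac" if num in ("dot", "frac") else "int")
        if ch == "." and num == "int":
            return ("arg", name, arg, "dot")
        complete = num in ("int", "frac")  # a well-formed number has been read
        if ch == "," and complete and arg + 1 < TOOLS[name]:
            return ("arg", name, arg + 1, "")
        if ch == ")" and complete and arg + 1 == TOOLS[name]:
            return ("done", name, arg, num)
    return None  # invalid character, or the call is already finished


def advance(state: State, token: str) -> Optional[State]:
    """Feed a multi-character token through the FSM one character at a time."""
    for ch in token:
        nxt = step(state, ch)
        if nxt is None:
            return None
        state = nxt
    return state


# Hypothetical vocabulary of multi-character tokens.
VOCAB = ["prod", "uct", "mul", "tiply", "add", "sqrt", "(", ")", ",", ".",
         "3", "14", "123", "1", "2"]


def allowed_tokens(state: State, vocab: List[str]) -> List[str]:
    """The current state's valid token set: tokens that never reach a dead state."""
    return [tok for tok in vocab if advance(state, tok) is not None]


# Toy stand-in for an LLM (hypothetical): it "intends" the hallucinated call
# product(3.14,123) and otherwise falls back to a fixed prior over name tokens.
INTENDED = ["prod", "uct", "(", "3", ".", "14", ",", "123", ")"]
PRIOR = {"mul": 0.5, "tiply": 0.4, "add": 0.3, "sqrt": 0.2}


def toy_score(i: int, token: str) -> float:
    if i < len(INTENDED) and token == INTENDED[i]:
        return 1.0
    return PRIOR.get(token, 0.0)


def constrained_greedy(score: Callable[[int, str], float], max_steps: int = 32) -> str:
    """Greedy decoding that only ever picks among the tokens the FSM allows."""
    state: State = START
    out: List[str] = []
    for i in range(max_steps):
        if state[0] == "done":
            break
        choices = allowed_tokens(state, VOCAB)
        if not choices:
            raise RuntimeError("no valid continuation from state %r" % (state,))
        tok = max(choices, key=lambda t: score(i, t))
        out.append(tok)
        state = advance(state, tok)  # never None: tok came from the allowed set
    return "".join(out)


print(constrained_greedy(toy_score))  # multiply(3.14,123)
```

The toy model prefers the non-existent tool `product`, but `prod` and `uct` never appear in the allowed set, so the same scores yield `multiply(3.14,123)`. Re-scanning the vocabulary character by character keeps the sketch short; a practical implementation would more likely precompute token-level transitions.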

Furthermore, TOOLDEC generalizes much more efficiently to new tools that never appeared before. Unlike prior approaches, which require fine-tuning or in-context descriptions of new tools, TOOLDEC automatically constructs a finite-state machine from a tool’s API signature (its name and argument types) and adds it to the existing FSM. TOOLDEC can then call new tools without fine-tuning or in-context demonstrations. While pre-trained language models can generate tool names when prompted to, they often hallucinate plausible tool names that are not in the inventory. TOOLDEC avoids this by construction. In Figure 1, both product and multiply sound plausible for the scenario, but only multiply is a given tool. Because TOOLDEC only calls existing tools, it cannot hallucinate a plausible yet non-existent tool, and it can rely on the tool names alone to find the right tool.
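For the tool-name part of the machine, extending the existing FSM when a new tool arrives can be illustrated with a prefix tree (trie) over name tokens. This is a hedged sketch rather than TOOLDEC's code: the class `ToolNameTrie`, its methods, the token splits such as `["mul", "tiply"]`, and the type strings are all hypothetical. Registering a tool only inserts a path, and a name such as `product` that was never registered has no path, so a decoder restricted to `allowed_next` can never start generating it.

```python
from typing import Dict, List, Optional


class ToolNameTrie:
    """Prefix tree over tool-name tokens: each root-to-node path spells a name prefix."""

    def __init__(self) -> None:
        self.children: Dict[str, "ToolNameTrie"] = {}
        # Argument types of the tool whose name ends here (None if no tool ends here).
        self.signature: Optional[List[str]] = None

    def add_tool(self, name_tokens: List[str], arg_types: List[str]) -> None:
        """Register a tool by extending the existing trie; nothing is retrained."""
        node = self
        for tok in name_tokens:
            if tok not in node.children:
                node.children[tok] = ToolNameTrie()
            node = node.children[tok]
        node.signature = list(arg_types)

    def _walk(self, prefix: List[str]) -> Optional["ToolNameTrie"]:
        """Follow `prefix` from the root; None means it leaves every registered name."""
        node: Optional[ToolNameTrie] = self
        for tok in prefix:
            node = node.children.get(tok) if node is not None else None
        return node

    def allowed_next(self, prefix: List[str]) -> List[str]:
        """Tokens that keep a partial name on the path to some registered tool."""
        node = self._walk(prefix)
        return sorted(node.children) if node is not None else []

    def signature_of(self, name_tokens: List[str]) -> Optional[List[str]]:
        """Argument types if `name_tokens` spells a complete registered name."""
        node = self._walk(name_tokens)
        return node.signature if node is not None else None


# Hypothetical inventory with hypothetical token splits.
tools = ToolNameTrie()
tools.add_tool(["mul", "tiply"], ["float", "float"])
tools.add_tool(["add"], ["float", "float"])

print(tools.allowed_next([]))        # ['add', 'mul']
print(tools.allowed_next(["prod"]))  # [] : "product" cannot even be started

# A new, unseen tool: only its signature is needed to extend the machine.
tools.add_tool(["sq", "rt"], ["float"])
print(tools.allowed_next([]))            # ['add', 'mul', 'sq']
print(tools.signature_of(["sq", "rt"]))  # ['float']
```

Adding `sqrt` needed neither retraining nor an in-context description: the set of tokens allowed at the start of a tool name simply gains a new branch. The stored argument types indicate which argument sub-machine a fuller implementation would switch to once the name is complete.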

The contributions of this paper can be summarized as follows:

• We propose TOOLDEC, a finite-state decoding algorithm that enables LLMs to use tools properly. TOOLDEC has two advantages: its generated tool calls are guaranteed to be syntactically correct, and it generalizes efficiently to unseen tools.

• We empirically verify TOOLDEC’s superior performance compared to strong prior baselines on four diverse datasets from different domains. Our extensive experiments show that TOOLDEC eliminates all syntax errors and hallucinated tool names, resulting in better accuracy and as much as 50% less inference time. Our results also indicate that TOOLDEC is more than 8x better than the baselines on mathematical reasoning with 9 unseen tools and 7x better on knowledge question answering with 204 unseen tools.

This paper is available on arXiv under the CC 4.0 DEED license.

[1] We release our code and data at https://github.com/chenhongqiao/tooldec.