This paper is available on arXiv under a CC 4.0 license.

Authors:

(1) Dipankar Sarkar, Cryptuon Research and me@dipankar.name

2 Formal Model - Rollups with Decentralized Common Pool (DCP)

Addressing the multifaceted nature of blockchain ecosystems, especially those spanning several rollups, demands a structured, rigorous approach to uphold transactional integrity and reliability. To this end, our formal model seeks to methodically dissect and elucidate atomic composability across Ethereum’s multiple rollups.

Our model combines classic distributed-systems theory with modern cryptographic practice. Merely adapting traditional system theories to the blockchain setting is not sufficient; the peculiarities of decentralized ledgers, especially within Ethereum’s ecosystem, call for a tailored model [9].

The following sections present the formal model, starting with fundamental definitions. We then examine its operational dynamics: transactional workflows, dependency resolution, and concurrency handling. Cryptographic methods, particularly zero-knowledge proofs, are highlighted for their role in efficient, confidential transaction validation.

With this formal model, we aim to give readers a clear and rigorous understanding of how to establish, sustain, and, if necessary, re-establish atomic composability in environments with multiple rollups.

2.1 Definitions

• R: Set of rollups on Ethereum.

• T: Set of transactions, where Ti,j is the j-th transaction on rollup Ri.

• Pd: Decentralized common pool.

• K: Set of cryptographic keys associated with transactions.

• τ : Timestamp attached to each transaction when it’s accepted by the majority of Pd nodes.

• B: Buffer zone where transactions with pending dependencies are stored.

• τmax: Maximum time a transaction can reside in buffer B.

• Bmax: Maximum number of transactions that can reside in buffer B.

• Dmax: Maximum number of attempts to resolve a transaction’s dependencies.
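The definitions above can be mirrored in a small data model. This is only a sketch: the class names, field names, and the choice of seconds as the time unit are assumptions made for illustration and are not part of the formal model.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Limits:
    """Buffer constraints from the definitions (units are assumptions)."""
    tau_max: float  # τmax: maximum time (seconds) a transaction may stay in B
    b_max: int      # Bmax: maximum number of transactions held in B
    d_max: int      # Dmax: maximum dependency-resolution attempts


@dataclass
class Transaction:
    """Ti,j: the j-th transaction on rollup Ri."""
    rollup: int                          # i, index of the rollup in R
    index: int                           # j, position within that rollup
    payload: bytes = b""
    depends_on: frozenset = frozenset()  # ids of transactions this one needs
    timestamp: Optional[float] = None    # τ, set once Pd accepts the transaction
    attempts: int = 0                    # resolution attempts made so far

    @property
    def tx_id(self) -> tuple:
        """Identifier (i, j) used to refer to this transaction."""
        return (self.rollup, self.index)
```

A transaction starts without a timestamp; τ is filled in only after a majority of Pd nodes accept it.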

2.2 Operations

2.2.1 Publish

• Description: This operation ensures that every transaction Ti,j from rollup Ri is published to the Decentralized Common Pool Pd.

• Steps:

1. Ri generates a transaction Ti,j.

2. Ri sends Ti,j to Pd.

3. On receiving Ti,j, the majority of nodes in Pd timestamp it with τ and store it.

• Output: publish(Ti,j) → Pd(τ)
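The publish steps can be sketched as follows. The model only states that a majority of Pd nodes timestamp the transaction; taking τ as the median of the nodes' receive times is an assumption of this sketch, as are the function signature and the dictionary used to represent the pool.

```python
from statistics import median


def publish(pool, tx_id, node_receive_times, total_nodes):
    """Store tx_id in the pool with timestamp τ once a majority has seen it.

    pool: dict mapping transaction id -> τ (stands in for Pd's storage).
    node_receive_times: dict mapping node id -> local receive time (seconds).
    Returns τ, or None if fewer than a majority of nodes have reported.
    """
    quorum = total_nodes // 2 + 1  # strict majority of Pd nodes
    if len(node_receive_times) < quorum:
        return None  # not yet accepted by a majority
    # Assumption: τ is the median of the reported receive times.
    tau = median(node_receive_times.values())
    pool[tx_id] = tau
    return tau
```

With five nodes, two reports are not enough to assign τ; a third report completes the majority and the transaction is stored.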

2.2.2 Buffer

• Description: Transactions with unmet dependencies are sent to the buffer B until their dependencies are resolved or they hit one of the defined limits.

• Steps:

1. If Ti,j has unresolved dependencies, it’s directed to B.

2. Ti,j resides in B until either its dependencies are resolved or it breaches one of the constraints (τmax, Bmax, or Dmax).

• Output: buffer(Ti,j) → B

2.2.3 Resolve

• Description: The operation checks for and resolves dependencies between two transactions, possibly from different rollups.

• Steps:

1. Given two transactions Ti,j and Tk,l, Pd checks for their mutual dependencies.

2. If dependencies exist, Pd attempts resolution based on available data and timestamps.

3. If resolution is successful within the constraints, the transactions proceed. Otherwise, they remain in B or are rejected.

• Output: resolve(Ti,j, Tk,l) → bool
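One possible reading of resolve is sketched below. The model does not specify how dependencies are represented, so this sketch assumes each transaction carries a set of ids it depends on, that a dependency counts as available once it is stored in Pd, and that directly dependent transactions must also be timestamped within a tolerance delta.

```python
def resolve(tx_a, tx_b, pool, delta):
    """Return True if tx_a and tx_b can proceed together.

    tx_a, tx_b: dicts with keys "id" and "depends_on" (a set of ids).
    pool: dict mapping id -> timestamp τ for transactions stored in Pd.
    delta: acceptable timestamp difference between dependent transactions.
    """
    # Both transactions must already be timestamped in Pd.
    if tx_a["id"] not in pool or tx_b["id"] not in pool:
        return False
    # Every declared dependency must be available in Pd.
    for tx in (tx_a, tx_b):
        if any(dep not in pool for dep in tx["depends_on"]):
            return False
    # If one depends on the other, their timestamps must be close enough.
    linked = tx_b["id"] in tx_a["depends_on"] or tx_a["id"] in tx_b["depends_on"]
    if linked:
        return abs(pool[tx_a["id"]] - pool[tx_b["id"]]) <= delta
    return True
```

A False result corresponds to step 3: the pair stays in B or is rejected, depending on the constraints.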

2.2.4 Verify

• Description: Ensures the validity and authenticity of the transaction using cryptographic keys.

• Steps:

1. For each transaction Ti,j, Pd uses the associated cryptographic key Ki,j to verify its authenticity and integrity.

2. If the verification succeeds, the transaction proceeds. Otherwise, it’s deemed invalid.

• Output: verify(Ti,j, Ki,j) → bool
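The model does not name a particular cryptographic scheme. As a minimal illustration, the sketch below uses HMAC-SHA256 from the Python standard library as a stand-in; this is an assumption, and a real deployment would more likely use public-key signatures so that Pd never holds a signing secret. The `sign` helper is hypothetical and exists only to produce a tag to check.

```python
import hashlib
import hmac


def sign(payload: bytes, key: bytes) -> bytes:
    """Hypothetical helper: produce an authentication tag for a payload."""
    return hmac.new(key, payload, hashlib.sha256).digest()


def verify(payload: bytes, tag: bytes, key: bytes) -> bool:
    """Return True if the tag matches the payload under the given key."""
    expected = hmac.new(key, payload, hashlib.sha256).digest()
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(expected, tag)
```

Any change to the payload or the key makes verification fail, which matches step 2: the transaction is deemed invalid.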

2.3 Transaction Processing

For two transactions Ti,j from Ri and Tk,l from Rk:

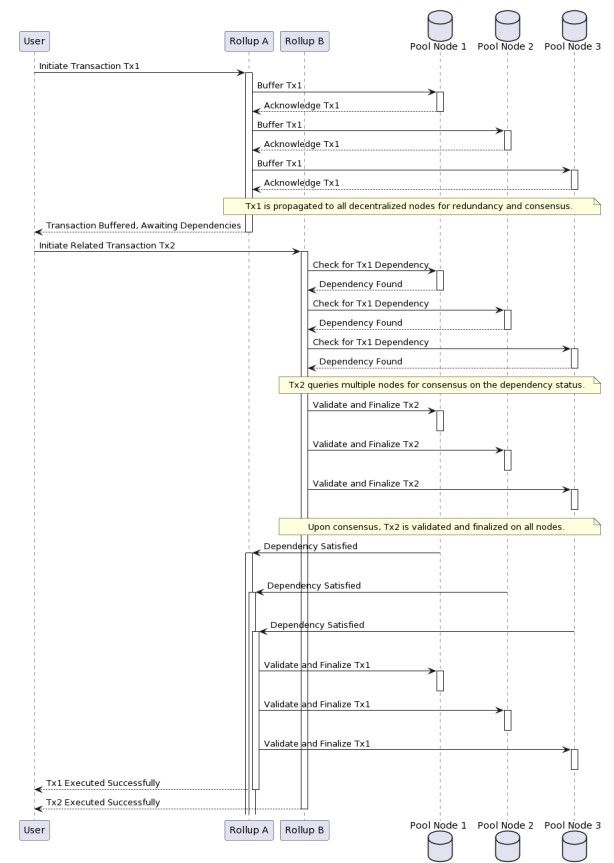

2.3.1 Timestamp-Based Handling

If both transactions are timestamped in Pd and their timestamps are within an acceptable time difference δ (delta), that is, |τ(Ti,j) − τ(Tk,l)| ≤ δ, then the transactions are deemed compatible and can be processed without buffering.

2.3.2 Buffering

• If the timestamp difference exceeds the acceptable delta, or if there’s a dependency which isn’t yet satisfied, transactions are sent to B.

• While in B:

– Periodic checks are done to see if dependencies can now be resolved.

– If (τcurrent − τ(Ti,j)) > τmax, Ti,j is rejected.

– If the buffer size exceeds Bmax, a rejection policy PR is triggered to create space.

– If attempts to resolve dependencies for Ti,j exceed Dmax, Ti,j is rejected.
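The periodic checks on B can be sketched as a single sweep over the buffer. The model leaves the rejection policy PR open; evicting the oldest transactions first is an assumption of this sketch, as is the dictionary layout of the buffer.

```python
def sweep(buffer, now, tau_max, b_max, d_max):
    """Apply the three limits to buffer B and return the rejected ids.

    buffer: dict id -> {"tau": float, "attempts": int}, mutated in place.
    now: current time τcurrent, in the same unit as the stored timestamps.
    """
    rejected = []
    # Reject transactions that waited too long or exhausted their attempts.
    for tx_id, entry in list(buffer.items()):
        if now - entry["tau"] > tau_max or entry["attempts"] > d_max:
            rejected.append(tx_id)
            del buffer[tx_id]
    # Assumed rejection policy PR: evict the oldest timestamps first.
    while len(buffer) > b_max:
        oldest = min(buffer, key=lambda k: buffer[k]["tau"])
        rejected.append(oldest)
        del buffer[oldest]
    return rejected
```

The returned ids are the transactions for which a rejection notification would be sent to the originating rollup.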

2.3.3 Rejection and Notification

Once a transaction is rejected, a notification mechanism informs the originating rollup Ri or the respective party about the rejection. This allows for potential re-submission or other actions from the user’s end.

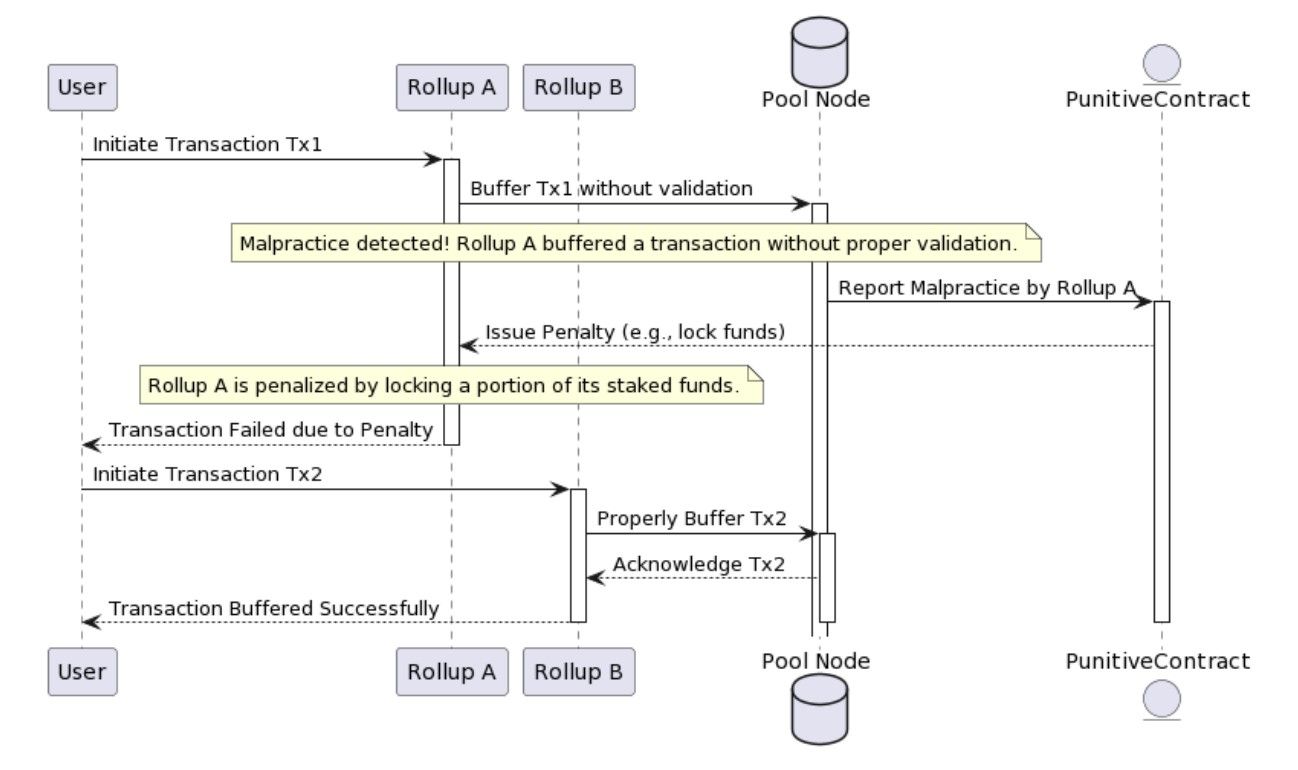

2.4 Rollup Punitive Measures

2.4.1 Staking Mechanism

Every rollup must stake a certain number of tokens to participate in the transaction composability system. This stake acts as collateral that can be forfeited or slashed if the rollup misbehaves.

2.4.2 Monitoring and Reporting

Third-party nodes or participants (often called "watchers" or "validators") can monitor transactions and execution behaviors across rollups. If they detect a rollup executing a transaction without proper validation or not adhering to the model’s rules, they can submit a proof of this misbehavior.

2.4.3 Misbehavior Proof

A system can be put in place to accept proofs of misbehavior. Once a proof is verified, punitive measures can be enacted on the misbehaving rollup, including forfeiture of its staked tokens.

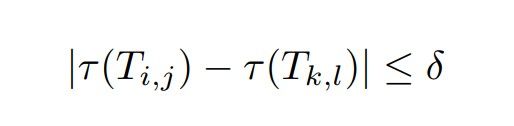
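The staking and slashing flow in this section could look roughly like the following. The class name, the minimum-stake threshold, and the slashing fraction are all hypothetical; the model only says that stake acts as collateral and can be forfeited once a misbehavior proof is verified. Proof verification itself is outside this sketch and is represented by a boolean.

```python
class StakeRegistry:
    """Tracks staked tokens per rollup (illustrative only)."""

    def __init__(self, min_stake):
        self.min_stake = min_stake  # assumed participation threshold
        self.stakes = {}            # rollup id -> staked token amount

    def deposit(self, rollup, amount):
        """Add tokens to a rollup's stake."""
        self.stakes[rollup] = self.stakes.get(rollup, 0) + amount

    def can_participate(self, rollup):
        """A rollup may take part only while its stake meets the threshold."""
        return self.stakes.get(rollup, 0) >= self.min_stake

    def slash(self, rollup, proof_is_valid, fraction=1.0):
        """Forfeit a fraction of the stake if the misbehavior proof verified.

        Returns the number of tokens slashed (0 if the proof was rejected).
        """
        if not proof_is_valid:
            return 0
        penalty = int(self.stakes.get(rollup, 0) * fraction)
        self.stakes[rollup] = self.stakes.get(rollup, 0) - penalty
        return penalty
```

In this sketch a slashed rollup that falls below the threshold must stake again before it can participate.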

2.5 Decentralized Common Pool (DCP)

Instead of having a centralized common pool, we can utilize a decentralized common pool, Pd. This pool would be maintained and updated by a network of nodes, ensuring redundancy and security. Utilizing a consensus mechanism, these nodes can agree on the state of the pool.

2.5.1 Core features

• Consensus Mechanism: Nodes in the Pd network use a consensus mechanism (like PoS, PoA, or a Byzantine Fault Tolerance mechanism) to validate and agree on the state of the pool. This prevents any single entity from maliciously modifying the data.

• Data Redundancy: The decentralized nature ensures that multiple copies of the transaction pool are stored across nodes. This redundancy makes it resistant to single points of failure and data tampering.

• Cryptographic Verification: Apart from using cryptographic keys for transaction verification, node participation, data propagation, and pool updates in the Pd network are also secured using cryptographic techniques, ensuring data integrity and authenticity.

2.5.2 Concurrency Handling with Transaction Delay/Rejection

• Timestamping: Each transaction that’s published to Pd is timestamped. This timestamp is based on when the transaction is received by the majority of nodes in the Pd network.

• Transaction Buffer: Transactions that have dependencies across rollups are not immediately processed. Instead, they’re placed in a buffer zone.

• Dependency Resolution with Buffer: During the resolution phase, the system checks the buffer to resolve dependencies. If Transaction Ti,j depends on Transaction Tk,l, the system will:

– Check if both transactions are in the buffer and if their timestamps are within an acceptable time difference (delta).

– If they are, the system processes both transactions.

– If not, and if the dependency can’t be resolved within a certain timeframe, the system might either:

1. Reject one or both of the transactions.

2. Delay the transaction processing until the dependency is resolved or a timeout occurs.
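The decision procedure above can be written as a small function that returns one of three outcomes. The text says the system "might" reject or delay; using a timeout to choose between the two is an assumption of this sketch, as are the names `Decision` and `decide`.

```python
from enum import Enum


class Decision(Enum):
    PROCESS = "process"
    DELAY = "delay"
    REJECT = "reject"


def decide(buffer, tx_id, dep_id, delta, now, timeout):
    """Decide what to do with tx_id, which depends on dep_id.

    buffer: dict id -> timestamp τ for transactions currently in the buffer.
    tx_id is assumed to be present in the buffer.
    """
    tau = buffer[tx_id]
    dep_tau = buffer.get(dep_id)
    # Both are buffered and their timestamps are within delta: process both.
    if dep_tau is not None and abs(tau - dep_tau) <= delta:
        return Decision.PROCESS
    # Otherwise wait until the timeout, then give up.
    if now - tau > timeout:
        return Decision.REJECT
    return Decision.DELAY
```

A shorter timeout favors responsiveness at the cost of more rejections; a longer one does the opposite, which is the trade-off the Challenges subsection discusses.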

2.5.3 Challenges

With the incorporation of a decentralized common pool, timestamping, a transaction buffer for dependency resolution, and enhanced security measures, the solution addresses many of the concurrency and security concerns. However, it’s essential to consider trade-offs:

• The system might have increased complexity due to decentralization and consensus mechanisms.

• Transaction delays, while managing concurrency, might not be suitable for applications that require real-time processing.

• Ensuring all nodes in the Pd network are updated in near real-time might present scalability challenges.